SERVERS

Bring the architecture that powers large language models such as ChatGPT into your business processes with our servers, equipped with NVIDIA’s most powerful GPUs.

OpenZeka – NVIDIA DGX AI Compute Systems Partner

GPU servers are designed for artificial intelligence, high-performance computing (HPC), data science, and advanced analytics. PCIe-based solutions offer cost efficiency and broad compatibility. SXM-based DGX and HGX systems use NVLink and NVSwitch to connect GPUs directly, which gives them the low latency and high bandwidth needed for large-scale AI training. SXM-based systems underpin the training of many modern large language models, including LLaMA and the models behind ChatGPT.

| | DGX Solutions | HGX Solutions | PCIe Solutions |
|---|---|---|---|
| Description | GPU servers optimized end-to-end by NVIDIA, combining GPU, CPU, memory, storage, and networking. | Flexible GPU servers offered by OEM vendors, built on NVIDIA’s SXM GPU and NVSwitch infrastructure, with additional CPU, memory, storage, and networking components. | Cost-effective and compatible servers using standard PCIe GPU cards. |
| Manufacturer | NVIDIA | OEM vendors (HGX platform provided by NVIDIA) | OEM vendors (Dell, HP, Supermicro, Gigabyte, etc.) |
| Performance | Direct GPU-to-GPU communication ensures low latency and high bandwidth for maximum performance. | Direct GPU-to-GPU communication ensures low latency and high bandwidth for maximum performance. | Since GPUs communicate over the PCIe bus, performance is lower compared to DGX and HGX solutions. |
| Flexibility | Limited flexibility, as NVIDIA delivers fully configured systems with fixed hardware setups. | OEM vendors can offer various combinations of CPU, RAM, storage, and networking. The number of GPUs can vary depending on the model (e.g., 4 or 8 SXM GPUs). | High flexibility: GPUs can be added or removed easily thanks to PCIe design, and systems can be scaled according to specific needs. |
| Scalability | NVSwitch + InfiniBand enable scaling up to thousands of GPUs. | NVSwitch + InfiniBand enable scaling up to thousands of GPUs. | Typically limited to 4–8 GPUs within a single server. |
| Use Cases | Large language models, HPC, advanced research. | Large language models, HPC, advanced research, and customized enterprise solutions. | Mid-scale training, inference, data analytics, and visualization. |

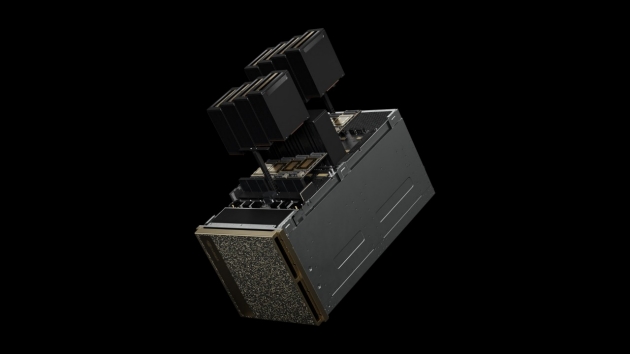

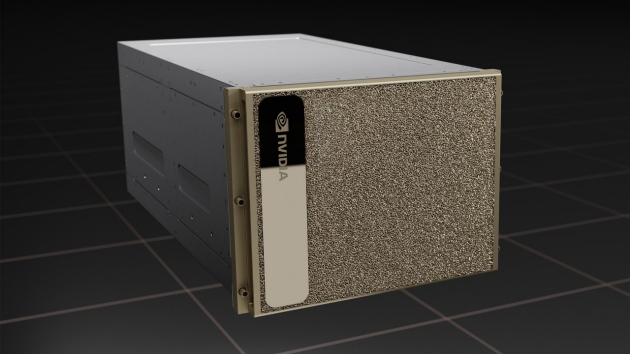

DGX SOLUTIONS

DGX Solutions are GPU servers fully integrated by NVIDIA and optimized for AI and HPC. They are easy to deploy, deliver maximum performance, and are ready to run large-scale workloads as delivered.

HGX SOLUTIONS

HGX Solutions are built by OEM vendors, who integrate NVIDIA’s HGX platform into their own server infrastructures. The result is flexible, high-performance GPU servers that can be adapted to various workloads such as AI training, HPC, and data analytics.

| | HGX B300 | HGX B200 | HGX H200 (4-GPU) | HGX H200 (8-GPU) |
|---|---|---|---|---|
| Form Factor | 8x NVIDIA Blackwell Ultra SXM | 8x NVIDIA Blackwell SXM | 4x NVIDIA H200 SXM | 8x NVIDIA H200 SXM |
| FP4 Tensor Core | 144 PFLOPS / 108 PFLOPS | 144 PFLOPS / 72 PFLOPS | 16 PFLOPS (FP8; H200 has no FP4 Tensor Core mode) | 32 PFLOPS (FP8; H200 has no FP4 Tensor Core mode) |
| TF32 Tensor Core | 18 PFLOPS | 18 PFLOPS | 4 PFLOPS | 8 PFLOPS |
| Total Memory | Up to 2.1 TB | 1.4 TB | 564 GB HBM3e | 1.1 TB HBM3e |
| Networking Bandwidth | 1.6 TB/s | 0.8 TB/s | 0.4 TB/s | 0.8 TB/s |
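
The system-level totals in the table above can be broken down per GPU with simple division. The Python sketch below illustrates that arithmetic only. The names `HgxConfig` and `per_gpu` are hypothetical helpers, the conversion 1 TB = 1000 GB is an assumption, and the even split across GPUs is a simplification, since the table lists rounded or "up to" totals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HgxConfig:
    """One column of the HGX table (hypothetical helper type)."""
    name: str
    gpus: int
    total_memory_gb: float  # "Total Memory" row, assuming 1 TB = 1000 GB
    network_tb_s: float     # "Networking Bandwidth" row, in TB/s


# Values copied from the table; the totals there are rounded or "up to" figures.
CONFIGS = [
    HgxConfig("HGX B300", 8, 2100.0, 1.6),
    HgxConfig("HGX B200", 8, 1400.0, 0.8),
    HgxConfig("HGX H200 4-GPU", 4, 564.0, 0.4),
    HgxConfig("HGX H200 8-GPU", 8, 1100.0, 0.8),
]


def per_gpu(cfg: HgxConfig) -> dict[str, float]:
    """Split the system totals evenly across the GPUs in the configuration."""
    return {
        "memory_gb": cfg.total_memory_gb / cfg.gpus,
        "network_gb_s": cfg.network_tb_s * 1000 / cfg.gpus,
    }


for cfg in CONFIGS:
    share = per_gpu(cfg)
    print(f"{cfg.name}: {share['memory_gb']:.1f} GB memory, "
          f"{share['network_gb_s']:.0f} GB/s networking per GPU")
```

For the 4-GPU H200 configuration this gives 141 GB of memory per GPU. The 8-GPU H200 row works out to 137.5 GB, which reflects the rounded 1.1 TB total rather than a different GPU.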

PCIe SOLUTIONS

PCIe Solutions are cost-effective and flexible server solutions that use standard PCIe GPU cards. Thanks to their high compatibility, they can be easily integrated with different systems and deliver balanced performance for mid-scale AI training, inference, data analytics, and visualization workloads.