NVIDIA DGX B200

The foundation for your AI center of excellence.

NVIDIA DGX SuperPOD

Purpose-Built for the Unique Demands of AI

Revolutionary Performance Backed by Evolutionary Innovation

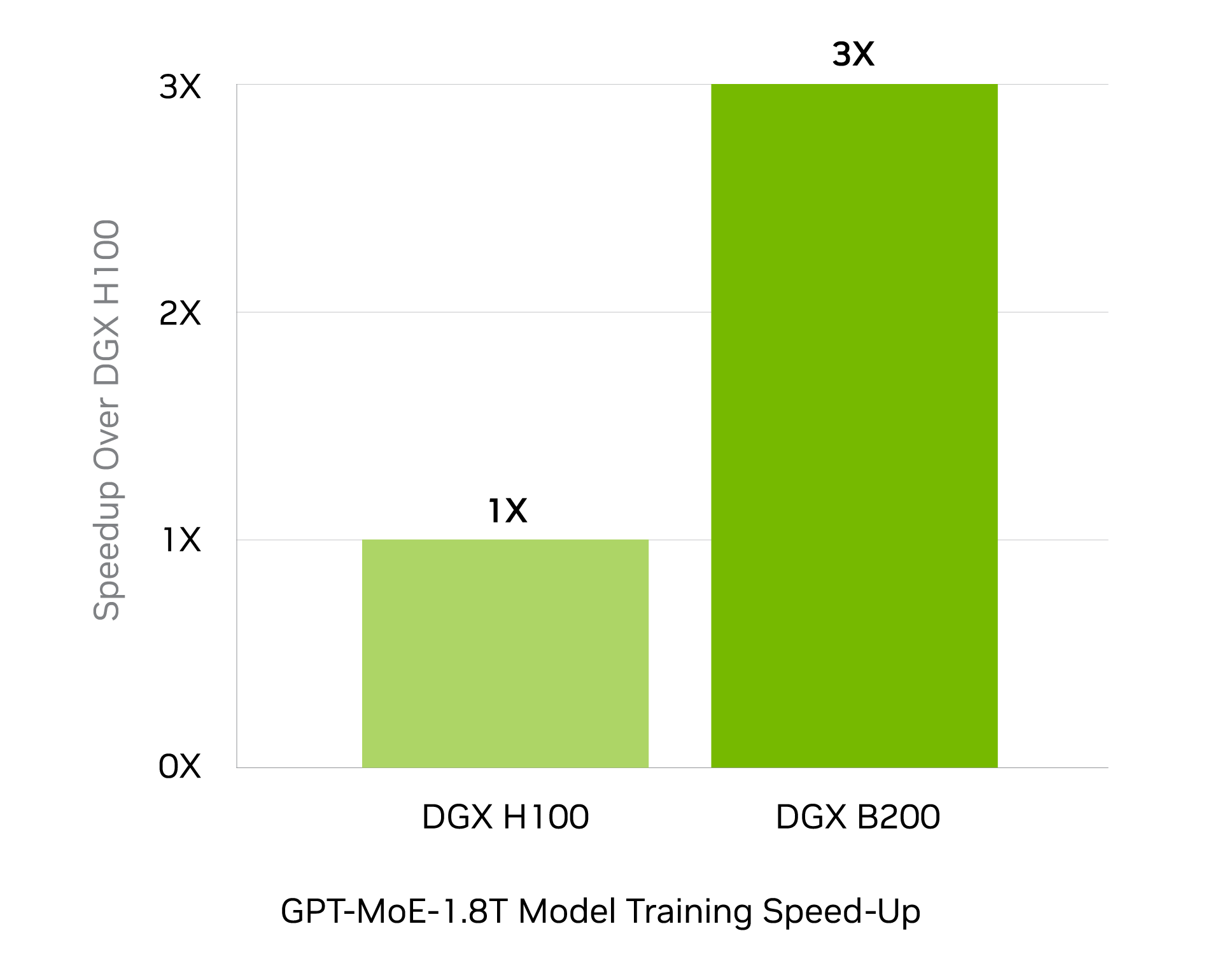

NVIDIA DGX B200 is a unified AI platform for develop-to-deploy pipelines, built for businesses of any size at any stage of their AI journey. Equipped with eight NVIDIA Blackwell GPUs interconnected with fifth-generation NVIDIA NVLink, DGX B200 delivers 3X the training performance and 15X the inference performance of the previous-generation DGX H100. Built on the NVIDIA Blackwell GPU architecture, DGX B200 handles diverse workloads, including large language models, recommender systems, and chatbots, making it well suited to businesses looking to accelerate their AI transformation.

A Unified AI Platform

One Platform for Develop-to-Deploy Pipelines

Enterprises require massive amounts of compute power to handle complex AI datasets at every stage of the AI pipeline, from training to fine-tuning to inference. With NVIDIA DGX B200, enterprises can arm their developers with a single platform built to accelerate their workflows.

Powerhouse of AI Performance

Powered by the NVIDIA Blackwell architecture’s advancements in computing, DGX B200 delivers 3X the training performance and 15X the inference performance of DGX H100. As the foundation of NVIDIA DGX BasePOD and NVIDIA DGX SuperPOD, DGX B200 delivers leading-edge performance for any workload.

Proven Infrastructure Standard

DGX B200 is a fully optimized hardware and software platform that comes with the complete NVIDIA AI software stack, including NVIDIA Base Command and NVIDIA AI Enterprise software, along with a rich ecosystem of third-party support and access to expert advice from NVIDIA professional services.

Next-Generation Performance Powered by DGX B200

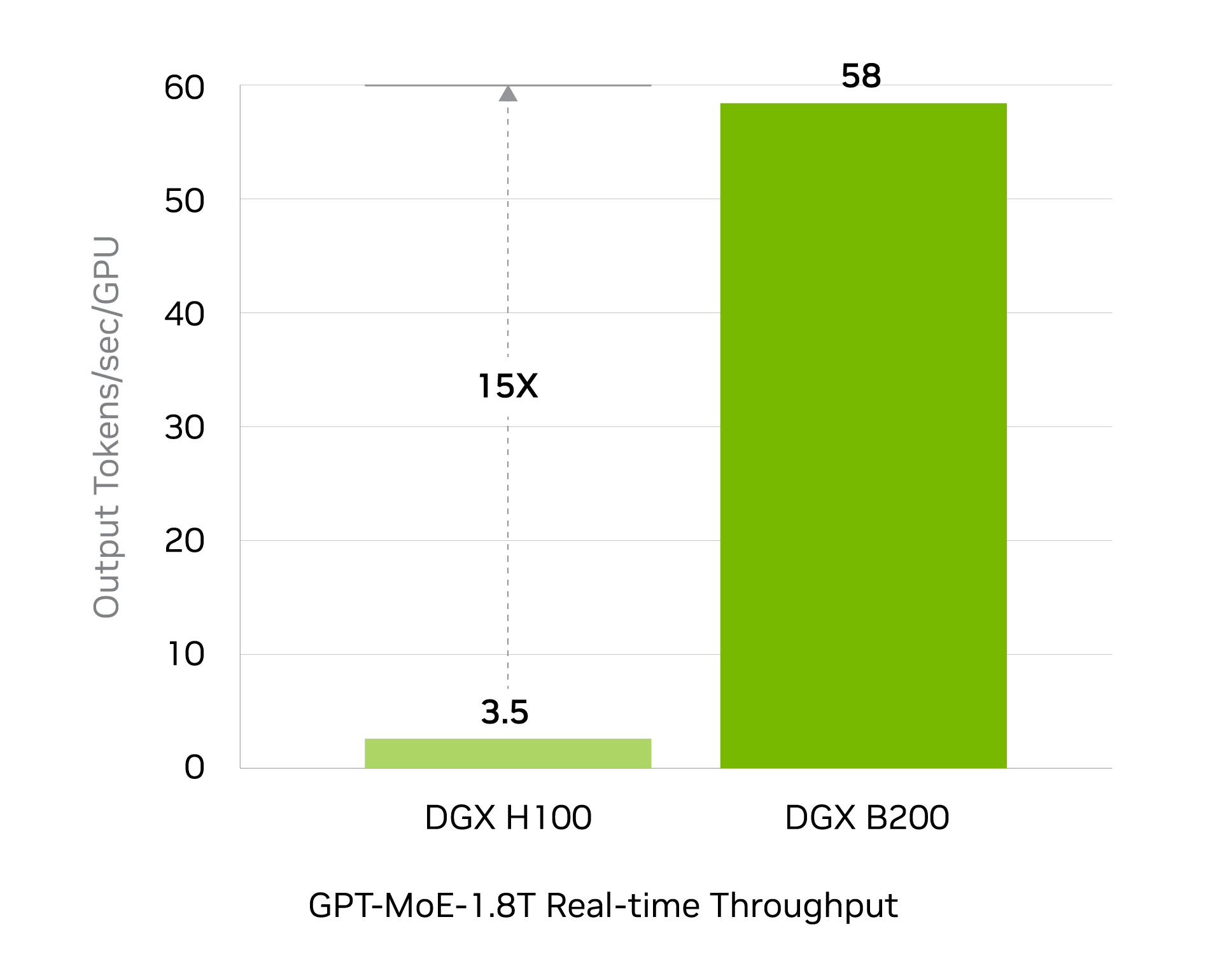

Real-Time Large Language Model Inference

Projected performance subject to change. Token-to-token latency (TTL) = 50ms real time, first token latency (FTL) = 5s, input sequence length = 32,768, output sequence length = 1,028, 8x eight-way DGX H100 GPUs air-cooled vs. 1x eight-way DGX B200 air-cooled, per GPU performance comparison.

Supercharged AI Training Performance

Projected performance subject to change. 32,768 GPU scale, 4,096x eight-way DGX H100 air-cooled cluster: 400G IB network, 4,096x eight-way DGX B200 air-cooled cluster: 400G IB network.
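
As a rough reading of the footnotes above, the stated latency budgets and cluster sizes can be turned into a few derived numbers. The sketch below is illustrative only: it assumes every output token after the first arrives exactly one TTL apart, which is a simplification, and the function and variable names are hypothetical rather than part of any NVIDIA tool.

```python
# Values copied from the benchmark footnotes.
TTL_S = 0.050           # token-to-token latency, in seconds
FTL_S = 5.0             # first token latency, in seconds
OUTPUT_TOKENS = 1028    # output sequence length
SYSTEMS = 4096          # number of eight-way DGX systems in the training cluster
GPUS_PER_SYSTEM = 8


def tokens_per_second_per_user(ttl_s: float) -> float:
    """Steady-state generation rate implied by a token-to-token latency."""
    return 1.0 / ttl_s


def response_time_s(ftl_s: float, ttl_s: float, output_tokens: int) -> float:
    """Time to receive a full response.

    Assumption: the first token arrives after ftl_s and each remaining
    token arrives exactly ttl_s after the previous one.
    """
    return ftl_s + (output_tokens - 1) * ttl_s


rate = tokens_per_second_per_user(TTL_S)
full_response = response_time_s(FTL_S, TTL_S, OUTPUT_TOKENS)
cluster_gpus = SYSTEMS * GPUS_PER_SYSTEM

print(f"Per-user rate: {rate:.1f} tokens/s")
print(f"Full response time: {full_response:.2f} s")
print(f"Training cluster size: {cluster_gpus} GPUs")
```

Under these assumptions, a 50ms TTL corresponds to 20 tokens per second per user, a full 1,028-token response takes roughly 56 seconds, and 4,096 eight-way systems account for the 32,768-GPU scale quoted for the training comparison.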

NVIDIA DGX B200 Specifications

| Specification | Details |
|---|---|
| GPU | 8x NVIDIA Blackwell GPUs |
| GPU Memory | 1,440GB total GPU memory |
| Performance | 72 petaFLOPS training and 144 petaFLOPS inference |
| Power Consumption | ~14.3kW max |
| CPU | 2 Intel® Xeon Platinum 8570 Processors; 112 cores total, 2.1 GHz (base), 4 GHz (max boost) |
| System Memory | Up to 4TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI: up to 400Gb/s InfiniBand/Ethernet; 2x dual-port QSFP112 NVIDIA BlueField-3 DPU: up to 400Gb/s InfiniBand/Ethernet |
| Management Network | 10Gb/s onboard NIC with RJ45; 100Gb/s dual-port Ethernet NIC; host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise: optimized AI software; NVIDIA Base Command: orchestration, scheduling, and cluster management; DGX OS / Ubuntu: operating system |
| Rack Units (RU) | 10 RU |
| System Dimensions | Height: 17.5in (444mm); Width: 19.0in (482.2mm); Length: 35.3in (897.1mm) |
| Operating Temperature | 5–30°C (41–86°F) |
| Enterprise Support | Three-year Enterprise Business-Standard Support for hardware and software; 24/7 Enterprise Support portal access; live agent support during local business hours |
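
The system-level totals in the table can be broken down into per-GPU and aggregate figures with simple arithmetic. The following is a minimal sketch that assumes memory and compute are split evenly across the eight GPUs (the table does not state per-GPU values); all names are hypothetical.

```python
# Figures copied from the specification table.
NUM_GPUS = 8
TOTAL_GPU_MEMORY_GB = 1440
TRAINING_PFLOPS = 72
INFERENCE_PFLOPS = 144
OS_DRIVES, OS_DRIVE_TB = 2, 1.9
DATA_DRIVES, DATA_DRIVE_TB = 8, 3.84


def per_gpu(total: float, num_gpus: int = NUM_GPUS) -> float:
    """Divide a system-level total evenly across GPUs (an assumption)."""
    return total / num_gpus


derived = {
    "gpu_memory_gb_per_gpu": per_gpu(TOTAL_GPU_MEMORY_GB),
    "training_pflops_per_gpu": per_gpu(TRAINING_PFLOPS),
    "inference_pflops_per_gpu": per_gpu(INFERENCE_PFLOPS),
    # Raw drive capacity, before any RAID or filesystem overhead.
    "os_storage_tb": round(OS_DRIVES * OS_DRIVE_TB, 2),
    "internal_storage_tb": round(DATA_DRIVES * DATA_DRIVE_TB, 2),
}

for name, value in derived.items():
    print(f"{name}: {value}")
```

With the table's figures, this gives 180GB of GPU memory per GPU, 9 petaFLOPS training and 18 petaFLOPS inference per GPU, and about 30.72TB of raw internal NVMe storage, all under the even-split assumption.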

Built from the ground up for enterprise AI, the NVIDIA DGX platform combines the best of NVIDIA software, infrastructure, and expertise in a modern, unified AI development solution, available in the cloud or on-premises.