NVIDIA GRACE CPU

Purpose-built to solve the world’s largest computing problems.

Accelerating the Largest AI, HPC, Cloud, and Hyperscale Workloads

AI models are exploding in complexity and size as they improve conversational AI with hundreds of billions of parameters, enhance deep recommender systems containing tens of terabytes of data, and enable new scientific discoveries. These massive models are pushing the limits of today’s systems. Continuing to scale them for accuracy and usefulness requires fast access to a large pool of memory and a tight coupling of the CPU and GPU.

Products

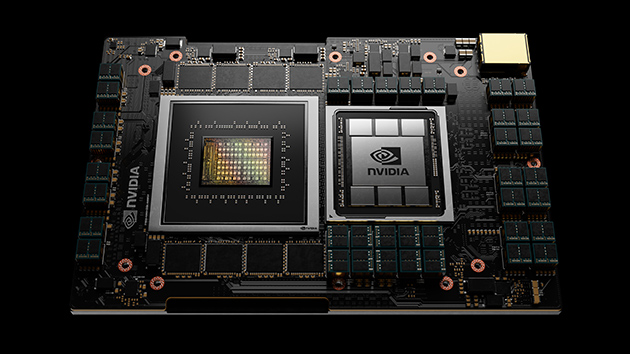

NVIDIA Grace Hopper Superchip

The NVIDIA Grace Hopper Superchip combines the Grace and Hopper architectures using NVIDIA® NVLink™-C2C to deliver a CPU+GPU coherent memory model for accelerated AI and high-performance computing (HPC) applications.

- CPU+GPU designed for giant-scale AI and HPC

- New 900 gigabytes per second (GB/s) coherent interface, 7X faster than PCIe Gen 5

- 30X higher aggregate system memory bandwidth to the GPU compared to NVIDIA DGX A100

- Runs all NVIDIA software stacks and platforms, including NVIDIA HPC, NVIDIA AI, and NVIDIA Omniverse™

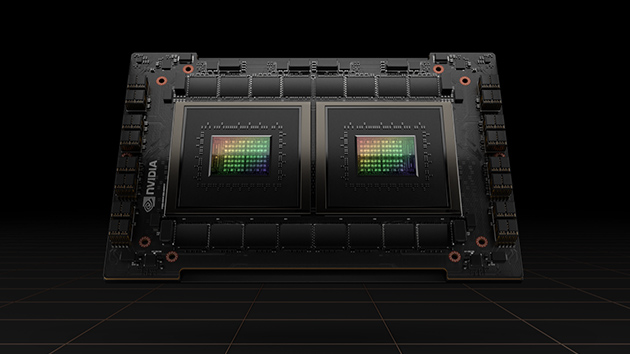

NVIDIA Grace CPU Superchip

NVIDIA Grace CPU Superchip uses the NVLink-C2C technology to deliver 144 Arm® v9 cores and 1 TB/s of memory bandwidth.

- High-performance CPU for HPC and cloud computing

- Superchip design with up to 144 Arm v9 CPU cores

- World’s first LPDDR5x memory with ECC, 1 TB/s total bandwidth

- SPECrate®2017_int_base over 740 (estimated)

- 900 GB/s coherent interface, 7X faster than PCIe Gen 5

- 2X the packaging density of DIMM-based solutions

- 2X the performance per watt of today’s leading CPU

- Runs all NVIDIA software stacks and platforms, including NVIDIA RTX, NVIDIA HPC, NVIDIA AI, and NVIDIA Omniverse

Watch NVIDIA founder and CEO Jensen Huang unveil the NVIDIA Grace CPU Superchip during his keynote.

The Latest Technical Innovations

Fourth-Generation NVIDIA NVLink-C2C

Solving the largest AI and HPC problems requires both high-capacity memory and high-bandwidth memory (HBM). The fourth-generation NVIDIA NVLink-C2C delivers 900 GB/s of bidirectional bandwidth between the NVIDIA Grace CPU and NVIDIA GPUs. The connection provides a unified, cache-coherent memory address space that combines system memory and GPU HBM for simplified programmability. This coherent, high-bandwidth connection between the CPU and GPUs is key to accelerating tomorrow’s most complex AI and HPC problems.
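The "7X faster than PCIe Gen 5" comparison quoted for this interface can be sanity-checked with simple arithmetic. The sketch below assumes the baseline is a PCIe Gen 5 x16 link at roughly 128 GB/s of bidirectional bandwidth (about 64 GB/s per direction); this page does not state which PCIe configuration the comparison uses, so treat that baseline, the helper names, and the 900 GB example workload as illustrative assumptions.

```python
# Bidirectional bandwidth stated on this page for NVLink-C2C.
NVLINK_C2C_GBPS = 900.0

# ASSUMPTION: comparison baseline is a PCIe Gen 5 x16 link,
# about 64 GB/s per direction, 128 GB/s bidirectional.
PCIE_GEN5_X16_GBPS = 128.0


def speedup(fast_gbps: float, slow_gbps: float) -> float:
    """Ratio of two peak bandwidths given in GB/s."""
    return fast_gbps / slow_gbps


def transfer_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Idealized time to move size_gb of aggregate traffic at peak bandwidth.

    Ignores latency, protocol overhead, and contention, so real
    transfers will take longer.
    """
    return size_gb / bandwidth_gbps


ratio = speedup(NVLINK_C2C_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink-C2C vs PCIe Gen 5 x16: {ratio:.1f}x")

# Hypothetical workload: 900 GB of combined CPU<->GPU traffic.
example_gb = 900.0
print(f"NVLink-C2C: {transfer_seconds(example_gb, NVLINK_C2C_GBPS):.2f} s")
print(f"PCIe Gen 5: {transfer_seconds(example_gb, PCIE_GEN5_X16_GBPS):.2f} s")
```

Under these assumptions the ratio comes out just above 7, in line with the figure quoted for the interface. These are peak numbers only; sustained throughput depends on the workload and system.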

New High-Bandwidth Memory Subsystem Using LPDDR5x with ECC

Memory bandwidth is a critical factor in server performance, and standard double data rate (DDR) memory consumes a significant portion of overall socket power. The NVIDIA Grace CPU is the first server CPU to harness LPDDR5x memory with server-class reliability, using mechanisms like error-correcting code (ECC) to meet the demands of the data center. It delivers 2X the memory bandwidth and up to 10X better energy efficiency compared to today’s server memory. Coupled with a large, high-performance last-level cache, the NVIDIA Grace LPDDR5x solution delivers the bandwidth necessary for large models while reducing system power to maximize performance for the next generation of workloads.

Next-Generation Arm v9 Cores

As the parallel compute capabilities of GPUs continue to advance, workloads can still be gated by serial tasks run on the CPU. A fast and efficient CPU is a critical component of system design to enable maximum workload acceleration. The NVIDIA Grace CPU integrates next-generation Arm v9 cores to deliver high performance in a power-efficient design, making it easier for scientists and researchers to do their life’s work.